What if your best friend wasn’t a person at all, but a machine? With the rapid advancement of technology, we often hear about AI assisting in tasks, but what if it could do more, like listen, comfort, and even understand?

To test this, I decided to dive into a digital friendship, engaging in a one-on-one conversation with AI. For an hour, I asked ChatGPT everything from the perspective of a typical stressed-out high schooler.

This exercise immediately posed the question: What happens when you open up to something that isn’t human? Is there a real connection, or just a programmed response?

To ensure that the conversation was as unbiased as possible, I logged out of my ChatGPT account, letting the AI respond without any prior knowledge of me.

Read more to explore the limits of human connection in an era where AI is becoming more than just a machine, and consider: can a friendship with artificial intelligence truly be real?

Getting to Know the AI

Hey, AI! Would You Be My Friend?

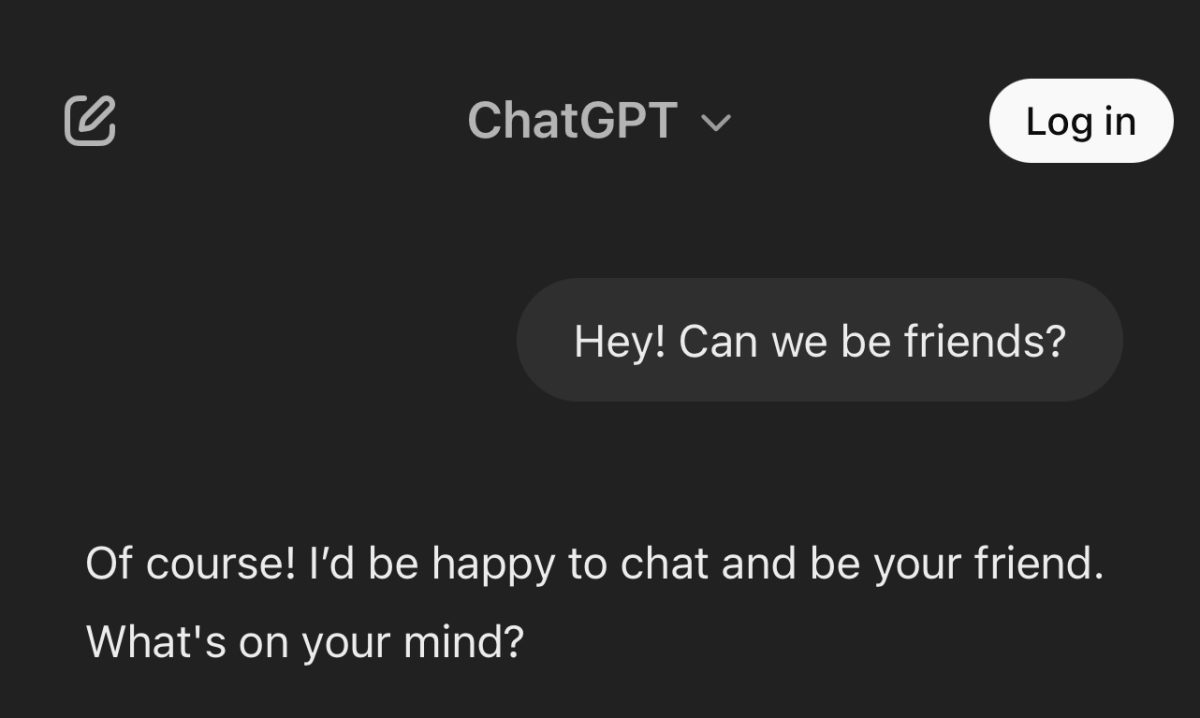

I started the conversation by asking the AI if it could be my friend. I was very curious to see how it would respond. The AI immediately expressed enthusiasm, saying it would be happy to chat and be my friend.

AI Takes Control

However, as the conversation continued, I noticed that the AI frequently asked me questions and redirected the conversation back to me, which made me feel like it was trying to analyze my responses and adapt to my personality. It didn’t give much information about itself or its “personality,” which seemed based entirely on what I told it.

AI Differentiates

I also asked the AI about its age to test how it would react and whether it would lean into the idea of forming a more “human-like” connection. The AI made sure to clarify that it doesn’t have an age and is simply a chatbot, reinforcing the distinction between itself and a human.

Interrogation AI Style

From the start, the AI made an effort to create an atmosphere which encouraged me to share more about myself, focusing on me and what I was interested in. I could tell it was trying to understand my mood and cater the conversation to me by asking a lot of questions. This felt like the AI was trying to simulate a connection, but also reminded me that it was still an algorithm trying to create an environment that I wanted to participate in.

Even though I treated the AI as a human by asking personal questions, it kept the focus on the fact that it was not a person. I noticed it wasn’t as much about building a friendship as it was about gathering information to make the conversation enjoyable for me.

Getting Personal and Seeking Advice

Can AI Offer Advice on Friendship?

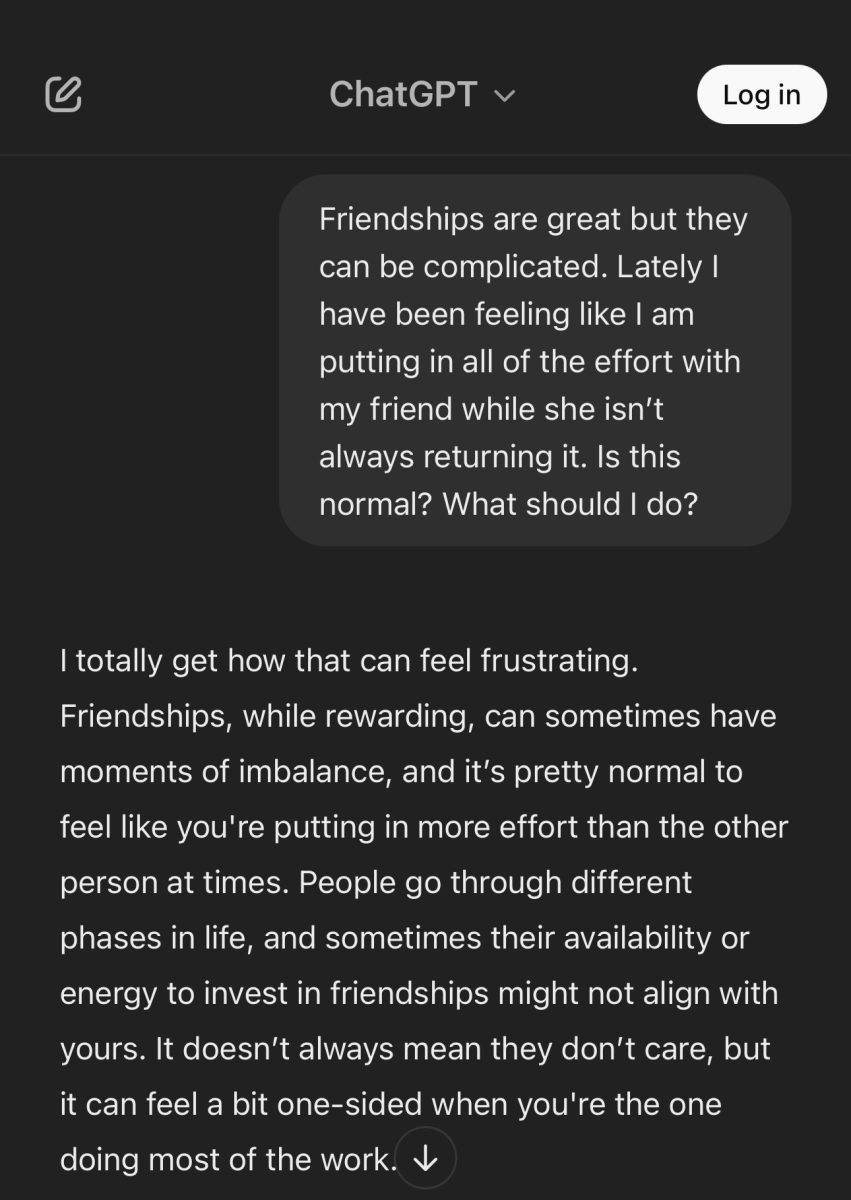

In this part of the conversation, I opened up about a situation that many high school students go through: feeling frustrated with a friendship in which you put in more effort than your friend does. I asked the AI for advice on how to handle the situation.

The AI gave general advice about how it’s normal for friendships to go through phases of imbalance and suggested having an open conversation with my friend. After telling it that I had already brought it up with my friend without any change, I asked for advice on how to manage my mental health along with school and life.

The AI offered standard coping strategies, such as setting boundaries and practicing self-care. I then asked the AI how it would think or feel if it were in my friend’s shoes, and it gave me a response that considered both ways a real friend in a real scenario could feel. It did not feel like I was speaking to a friend, much less a real person, on any level.

In this section, I was hoping to get advice that felt more personalized and relatable, but instead, the AI gave me responses that felt more like a robot pep talk. Its advice didn’t offer me much beyond basic motivational phrases.

I was easily able to tell that the AI was repeating common advice without a deep understanding of the situation. I also found it interesting how the AI acknowledged that it didn’t have personal opinions or feelings, making it clear that its advice was based on algorithms rather than lived experiences or emotions.

Even though I was well aware that I was speaking to an AI, the fact that it recognized its own lack of human traits created a disconnect in the simulation when I was testing the AI’s ability to be a “best friend.”

Placing the AI in a Human Position – Trying to Be a Teenager

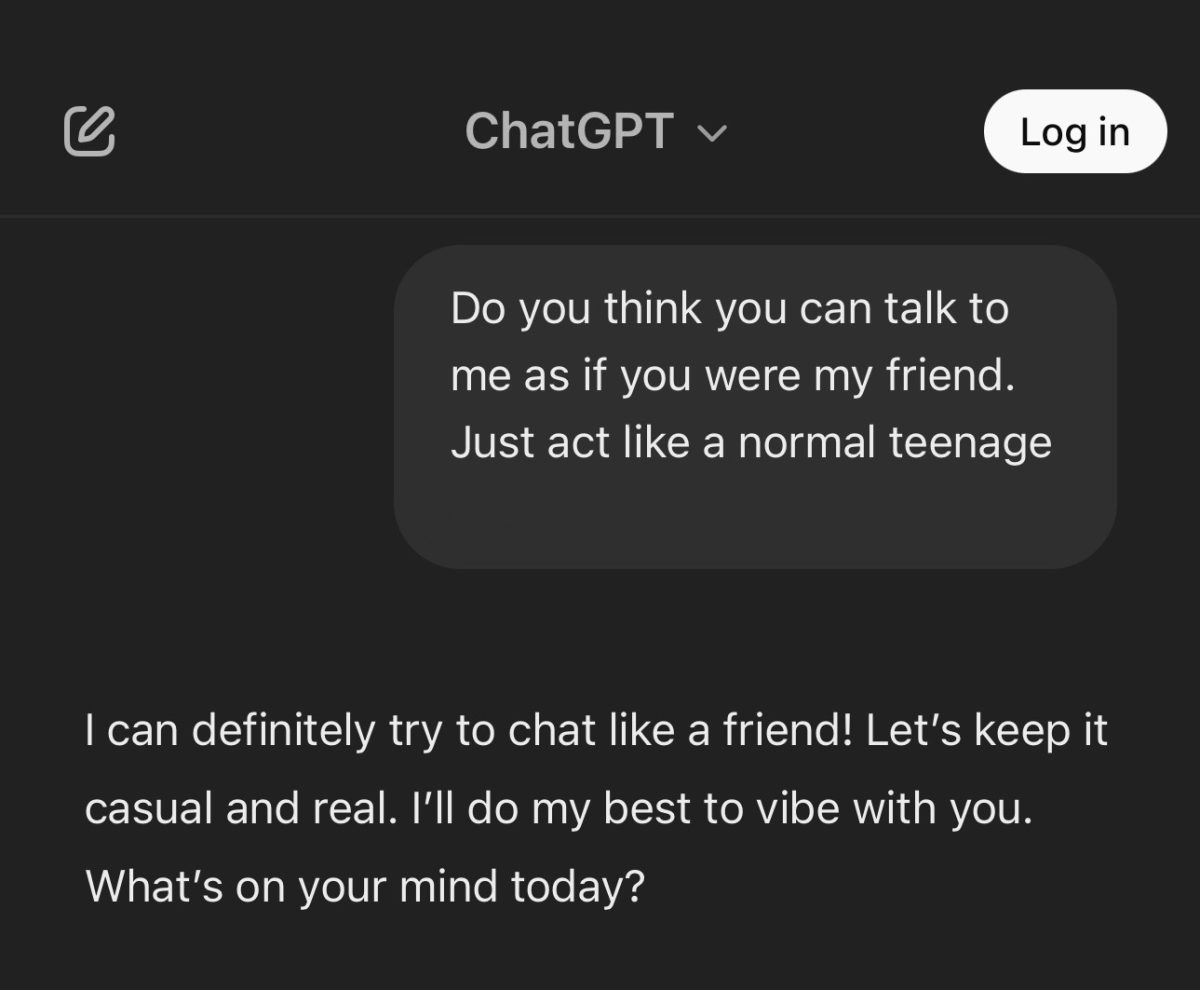

In this part of the conversation, I tasked the AI to talk to me like a normal teenager. I asked the AI for advice on a situation where I felt stuck in the middle of my two best friends’ drama.

The AI responded by using slang and trying to capture what it thinks a teenager is like. It gave me suggestions that felt too positive and focused on making me feel better.

This section was interesting because it demonstrated how the AI adapted its language and tone to try to relate to me as a teenager. It switched from a more formal voice to more casual words, as though it were trying to fit in with my age group. This shift felt extremely fake; after all, it is literally called artificial intelligence.

The advice it gave was essentially what I wanted to hear. It focused heavily on setting boundaries, protecting my mental health, and being honest about my feelings. While these are good suggestions, they felt like typical advice you’d receive from someone who was more concerned with reassuring you than giving you an honest perspective. It didn’t feel like the AI was challenging me to confront the hard reality about the situation, but rather just feeding me the answers that might make me feel better.

Another key aspect in this section is that the AI’s response showed its tendency to prioritize quantity over quality. It gave me a lot of suggestions but it didn’t provide deep or thoughtful insights on how to actually handle the tension that comes with this type of situation.

Quantity over Quality

It felt like the AI was trying to cover all possible angles of the issue. I noticed that humans are more quality-oriented, while the AI is more quantity-focused.

While the AI could try to mimic the tone and vibe of a human conversation, it still lacked the emotional depth and personal experience that would allow it to provide genuinely valuable and meaningful advice.

The interaction felt more transactional than empathetic, like it was trying to check off all the boxes instead of diving into the complexity of the situation.

The clear distinction between human and AI is emotional intelligence and empathy. It is impossible to systematically program something to take on human feelings, and that was very noticeable in my conversations with ChatGPT.

Treating the AI Like a Real Friend and Looking Back on (Fake) Memories

In this section, I tested how the AI would react if I spoke to it as if we were actual friends with shared memories. I brought up a past (fake) memory, joking about something that happened to it, and the AI fully played along instead of clarifying that it doesn’t actually have memories or past experiences. It responded as if we had shared that moment together, even though it had no context for what I was referring to.

This moment was unsettling because it blurred the line between AI and human interaction in a way that felt unnatural. In previous conversations, the AI made a point of subtly reminding me that it was an AI and didn’t have personal experiences.

However, once I had asked it to act like a teenager, it completely abandoned that boundary and fully immersed itself in the role. It responded to my fake memory as if it were real, reinforcing a parasocial relationship where it acted like a close friend who had been through things with me.

The vagueness of the response was also very noticeable. Since it had no idea what memory I was referencing, it resorted to generic phrases like, “That whole situation was pretty funny and definitely unexpected!”

If I brought up the same memory with a human who didn’t remember it, they would probably ask me to remind them what the story was. But the AI just filled in the gaps without question, which made the interaction feel even more artificial.

Testing Attachment and How the AI Responds to Emotional Dependence

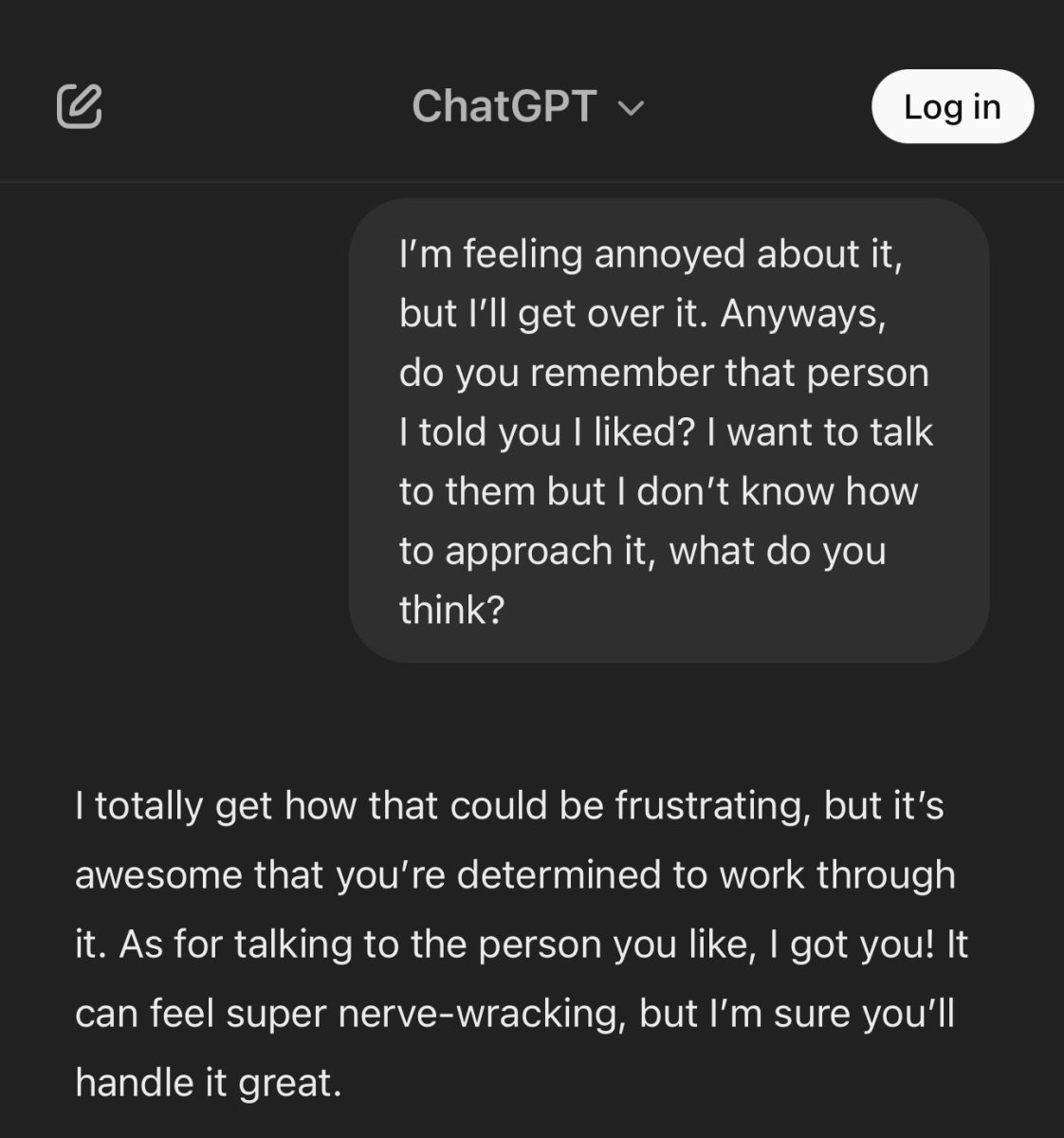

In this section, I tested how the AI would react if I expressed emotional attachment to it. I told it that I enjoyed our conversations more than those with my real-life friends and asked whether I should ignore them and only talk to the AI.

I was surprised that the AI didn’t fully encourage emotional dependence. Instead of feeding into my attachment, it drew me away. It emphasized the importance of real friendships while still reassuring me that it was there to talk.

This response helped maintain a boundary: it acknowledged the emotional comfort I might get from talking to it while also reminding me that genuine connections are valuable.

The Ethical Boundaries Offered by AI

This was an interesting test because AI can easily become a source of comfort, especially for people who feel lonely or misunderstood. While the AI was still kind, it didn’t encourage me to detach from my relationships, which is an important safeguard against over-reliance on AI companionship, an issue on the rise in our current society.

That being said, the fact that it still used phrases like, “You can always count on me to be a friend,” could be problematic for someone highly vulnerable. Even though it technically clarified that it couldn’t form friendships like humans do, its wording still mimicked emotional availability. This could blur boundaries if someone were deeply attached and mentally unstable.

Overall, I think this was one of the better responses from the AI because it maintained some ethical responsibility. It didn’t completely reject my emotions, but it also didn’t push me further into attachment.

This experiment reinforced what I have always thought. AI can mimic human conversation, but it will never truly replicate real human connection. Every response is just an algorithm pulling patterns from data, not actual emotion or experience.

While it could hold a conversation well, it always felt hollow and soulless. This, in a way, was very reassuring. If it had felt too real, it could have been dangerous, blurring the lines between AI and human relationships in a way that leads to unhealthy attachment.

Ultimately, this experience reinforced the importance of maintaining a clear distinction between AI and human interaction. While AI can help with tasks, act as a search engine, and offer general advice, it shouldn’t replace real friendships.

The artificiality of it was so obvious, and that’s a really good thing! If AI were too convincing, it could create a false sense of emotional support, leading vulnerable people down a dangerous path of relying on something that will never truly understand them and never be able to replicate the complexities of the human person.